How Evolving FPGA Architectures Will Transform Machine Vision Applications

CPUs process instructions sequentially, while the multiple cores within GPUs perform parallel computations. FPGAs take parallelism to an entirely new level.

Recent advances in FPGA architecture — particularly the integration of dedicated AI processing elements — are reshaping the possibilities for future machine vision applications. Understanding how these devices handle image processing can help vision engineers identify opportunities where this established though fast-advancing technology might outperform traditional approaches.

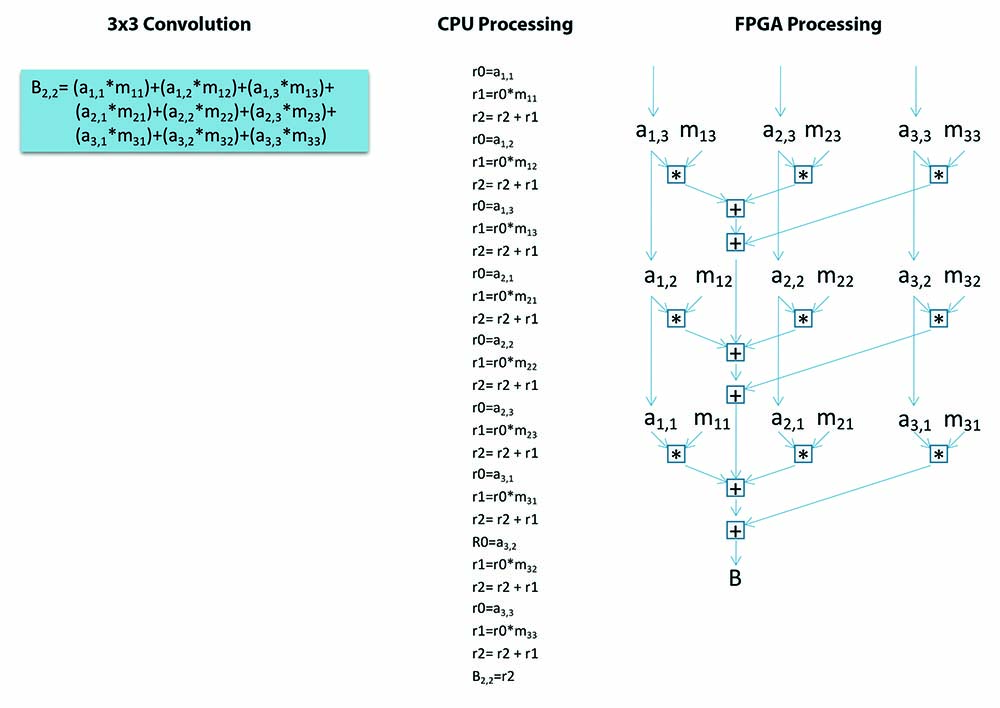

In the 3 × 3 convolution shown here, nine pixels are multiplied by filter coefficients and summed. As convolutions involve more pixels, the compute requirements climb quickly. For example, a 7 × 7 convolution involves 49 multiply-add operations, or roughly 100 arithmetic operations per output pixel. That puts a lot of strain on a CPU.

The Pipeline Advantage

Image processing often involves operations like convolution filters, where the brightness of each pixel is replaced by a value computed from that pixel and its neighbors. A typical 3 × 3 convolution requires nine multiply-add operations per pixel. This task is straightforward enough for a CPU handling a few pixels. The load grows quickly, however, well before the chip needs to process millions of pixels at video rates.
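To make the per-pixel cost concrete, here is a minimal Python sketch of a direct 3 × 3 convolution. The function name `convolve3x3`, the list-of-lists image format, and the decision to skip border pixels are illustrative assumptions rather than details from any particular vision library. The function counts multiply-add operations as it runs, so the nine-per-pixel figure can be checked directly.

```python
def convolve3x3(image, kernel):
    """Apply a 3x3 filter to the interior pixels of a 2D list of numbers.

    Border pixels are skipped (an assumption made to keep the sketch short).
    The kernel is applied without flipping, as is common in image filtering.
    Returns the filtered interior and the number of multiply-adds performed.
    """
    height, width = len(image), len(image[0])
    output = []
    mac_count = 0
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    acc += image[y + ky - 1][x + kx - 1] * kernel[ky][kx]
                    mac_count += 1
            row.append(acc)
        output.append(row)
    return output, mac_count


# A 4x4 test image has a 2x2 interior, so 4 output pixels x 9 multiply-adds each.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
box_kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
result, macs = convolve3x3(image, box_kernel)
print(result)  # [[54, 63], [90, 99]]
print(macs)    # 36
```

On a CPU, the four nested loops run one multiply-add at a time, which is why the cost scales directly with both kernel size and pixel count.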

GPUs are traditionally the preferred solution as the number of convolutions multiplies, provided there is ample power available. FPGAs have also evolved to efficiently compute thousands of operations in parallel on chip. Rather than relying on a fixed number of cores, however, FPGAs pass data from one compute element to the next in a pipelined fashion.

The approach resembles an assembly line in which every stage works on a different part of the calculation at the same time, which lets FPGAs process image data more efficiently. The convolution is fixed in the FPGA fabric, and data streams through the pipeline, which can produce one or more results per clock cycle. The efficiency gain becomes more pronounced as operations become more complex, which makes FPGAs a power-efficient alternative to GPUs. Additionally, the architectural advantages of FPGAs enable multicamera systems to handle high-bandwidth data streams and process frames without the external memory transfer bottlenecks that GPU-based systems often face.
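The assembly-line idea can be modeled in software. The sketch below is a Python emulation, not FPGA code: it assumes pixels arrive one per "clock" in raster order and uses two line buffers plus a 3 × 3 window register, a structure commonly used for streaming filters. The names `stream_convolve3x3`, `line1`, `line2`, and `window` are hypothetical. Once the window has filled, each incoming pixel yields one result without the frame ever being stored in full.

```python
from collections import deque


def stream_convolve3x3(pixels, width, kernel):
    """Yield one 3x3 filter result per incoming pixel once the window is valid.

    pixels: iterable of pixel values in raster order, one per simulated clock.
    width:  number of pixels per image row.
    """
    # Line buffers hold the previous two rows, standing in for on-chip memory.
    line1 = deque([0] * width, maxlen=width)  # pixels from one row above
    line2 = deque([0] * width, maxlen=width)  # pixels from two rows above
    window = [[0] * 3 for _ in range(3)]      # 3x3 register window

    for index, pixel in enumerate(pixels):
        y, x = divmod(index, width)
        above1 = line1[0]  # same column, previous row
        above2 = line2[0]  # same column, two rows back

        # Shift the window one column to the left and load the new column.
        for r in range(3):
            window[r][0], window[r][1] = window[r][1], window[r][2]
        window[0][2], window[1][2], window[2][2] = above2, above1, pixel

        # Advance the line buffers by one pixel.
        line2.append(above1)
        line1.append(pixel)

        # The window only covers real image data after two rows and two columns.
        if y >= 2 and x >= 2:
            yield sum(window[r][c] * kernel[r][c]
                      for r in range(3) for c in range(3))


box_kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
print(list(stream_convolve3x3(range(1, 17), 4, box_kernel)))  # [54, 63, 90, 99]
```

In hardware, the nine multiplies and the additions inside the `sum` would be separate pipeline stages working concurrently. The Python loop only imitates the data movement, not the timing.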

The Cutting Edge

Recent FPGA developments have specifically targeted vision and AI workloads. Modern devices now integrate traditional programmable logic with dedicated AI processing tiles designed for inference operations. These AI blocks can handle neural network computations while the FPGA fabric simultaneously manages sensor interfaces, preprocessing, and system control.

Such hybrid architectures underlie new system designs emerging on the market. One platform, for example, combines FPGA logic for real-time sensor processing, dedicated AI cores for classification tasks, and embedded ARM processors for system management — all on a single chip. Such integration optimizes task distribution: Low-latency operations run in the FPGA fabric, AI inference uses the specialized tiles, and general computing happens on embedded processors.

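The implications for FPGA performance are significant. An FPGA with 1,600 digital signal processor (DSP) ASIC cells running at 250 MHz can perform 1,600 × 250 million = 400 billion operations per second (400 GOPS), assuming each cell completes one operation per clock cycle. That is significantly more processing power than traditional multicore CPUs provide.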
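The arithmetic behind that figure is simple enough to check in a few lines of Python. The helper `peak_gops` is a hypothetical name, and the default of one operation per DSP cell per clock cycle is an assumption that reproduces the 400 GOPS figure. Counting the multiply and the add separately would double the result.

```python
def peak_gops(dsp_cells, clock_hz, ops_per_cell_per_cycle=1):
    """Peak throughput in giga-operations per second, assuming every DSP cell
    completes the given number of operations on every clock cycle."""
    return dsp_cells * clock_hz * ops_per_cell_per_cycle / 1e9


print(peak_gops(1600, 250e6))     # 400.0
print(peak_gops(1600, 250e6, 2))  # 800.0 if multiply and add count separately
```

This is a peak number: it assumes the design keeps every DSP cell busy on every cycle, which real designs only approach.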

Or consider processing operations to improve image quality, which might examine a 7 × 7 pixel window to enhance each output pixel. This requires about 100 operations per pixel, or roughly 200 million operations per HD image. At 30 frames per second, that's about 6 GOPS. Though such a workload would quickly overwhelm the capacity of a single CPU, FPGAs can perform the job in real time. Further, by assigning an FPGA to improve image quality, the CPU remains free to run the full operating system, handle user interfaces, manage low-speed sensors and actuators, and maintain secure cloud connections.
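The workload estimate can be reproduced with a short Python calculation. It assumes "HD" means 1920 × 1080 pixels, which the text does not state explicitly, and the function names are illustrative. A 7 × 7 window needs 49 multiplies and 48 additions, which is where the round figure of about 100 operations per pixel comes from.

```python
def ops_per_output_pixel(kernel_size):
    """Multiplies plus additions needed for one output pixel of a square filter."""
    taps = kernel_size * kernel_size
    return taps + (taps - 1)


def workload_gops(width, height, ops_per_pixel, frames_per_second):
    """Sustained workload in giga-operations per second."""
    return width * height * ops_per_pixel * frames_per_second / 1e9


print(ops_per_output_pixel(7))  # 97, rounded to about 100 in the text

# Assumed HD resolution of 1920 x 1080, using the rounded 100 operations per pixel.
print(1920 * 1080 * 100)                             # 207360000 operations per frame
print(round(workload_gops(1920, 1080, 100, 30), 2))  # 6.22
```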

Importantly, while CPU-based systems must compete with operating system tasks and memory management overhead, FPGA processing occurs with deterministic latency. This ability is critical for machine vision applications that require fixed response times or precise synchronization of data from multiple sensors.

FPGAs are not a panacea. CPUs, GPUs, or some combination thereof are still suitable for many machine vision and edge AI applications. However, as demand grows for more intensive compute, the low latency, deterministic responsiveness, and embedded AI capabilities of FPGA-based solutions will continue to bridge performance gaps for today’s most challenging vision tasks.

Concurrent EDA specializes in creating high-performance FPGA designs directly from customer software. If you would like to learn more about how FPGAs can drive advances in your high-speed, high-data-rate machine vision applications, reach out to set up a meeting.

Distributed by Concurrent EDA, LLC
